In this post, Kristie Miller discusses her article recently published in Ergo. The full-length version of Kristie’s article can be found here.

It might seem obvious that we experience the passing of time. Certainly, in some trivial sense we do. It is now late morning. Earlier, it was early morning. It seems to me as though some period of time has elapsed since it was early morning. Indeed, during that period it seemed to me as though time was elapsing, in that I seemed to be located at progressively later times.

One question that arises is this: in what do these seemings consist? One way to put the question is to ask what content our experience has. What state of the world does the experience represent as being the case?

Philosophers disagree about which answer is correct. Some think that time itself passes. In other words, they think that there is a unique set of events that are objectively, metaphysically, and non-perspectivally present, and that which events those are, changes. Other philosophers disagree. They hold that time itself is static; it does not pass, because no events are objectively, metaphysically, and non-perspectivally present, such that which events those are, changes. Rather, whether an event is present is a merely subjective or perspectival matter, to be understood in terms of where the event is located relative to some agent.

Those who claim that time itself passes typically use this claim to explain why we experience it as passing: we experience time as passing because it does. What, though, should we say if we think that time does not pass, but is rather static? You might think that the most natural thing to say would be that we don’t experience time as passing. We don’t represent there being a set of events that are non-perspectivally present, and that which events those are, changes. Of course, we represent various events as occurring in a certain temporal order, and as being separated by a certain temporal duration, and we experience ourselves as being located at some times (rather than others) – but none of that involves us representing that some events have a special metaphysical status, and that which events have that status, changes. So, on this view, we have veridical experiences of static time.

Interestingly, however, until quite recently this was not the orthodox view. Instead, the orthodoxy was a view known as passage illusionism. This is the view that although time does not pass, it nevertheless seems to us as though it does. So, we are subject to an illusion in which things seem to us some way that they are not. In my paper I argue against passage illusionism. I consider various ways that the illusionist might try to explain the illusion of time passing, and I argue that none of them is plausible.

The illusionist’s job is quite difficult. First, the illusion in question is pervasive. At all times that we are conscious, it seems to us as though time passes. Second, the illusion is of something that does not exist – it is not an experience which could, in other circumstances, be veridical.

In the psychological sciences, illusions are explained by appealing to cognitive mechanisms that typically function well in representing some feature(s) of our environment. In most conditions, these mechanisms deliver us veridical experiences. In some local environments, however, certain features lead the mechanism to misrepresent the world, generating an illusion. These kinds of explanation, however, involve illusions that are not pervasive (they occur only in some local environments) and are not of something that does not exist (they are the product of mechanisms that normally deliver veridical experiences). This gives us reason to doubt that any explanation of this kind will work for the passage illusionist.

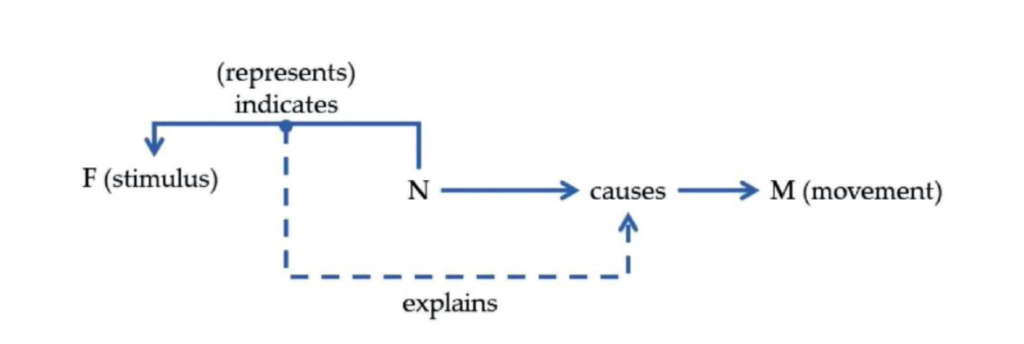

I consider a number of mechanisms that represent aspects of time, including those that represent temporal order, duration, simultaneity, motion and change. I argue that, regardless of how we think about the content of mental states, we should conclude that none of the representational states generated by these mechanisms individually, or jointly, represent time as passing.

First, suppose we think that the content of our experiences is exhausted by the things in the world that those experiences typically co-vary with. For instance, suppose you have a kind of mental state which typically co-varies with the presence of cows. On this view, that mental state represents cows, and nothing more. I argue that if we take this view of representational content, then none of the contents generated by the functioning of the various mechanisms that represent aspects of time could, either severally or (importantly) jointly, represent time as passing. For even if our brains could in some way ‘knit together’ some of these contents into a new percept, such contents don’t have the right features to generate a representation of time passing. For instance, they don’t include a representation of objective, non-perspectival presence. So, if we hold this view on mental content, we should think that passage illusionism is false.

Alternatively, we might think that our mental states do represent the things in the world with which they typically co-vary, but that their content is not exhausted by representing those things. So, the illusionist could argue that we experience passage by representing various temporal features, such that our experiences have not only that content, but also some extra content, and that jointly this generates a representation of temporal passage.

I argue that it is very hard to see why we would come to have experiences with this particular extra content. Representing that certain events are objectively, metaphysically, and non-perspectivally present, and that which events these are, changes, is a very sophisticated representation. If it is not an accurate representation, it’s hard to see why we would come to have it. Further, it seems plausible that the human experience of time is, in this regard, similar to the experience of some non-human animals. Yet it seems unlikely that non-human animals would come to have such sophisticated representations, if the world does not in fact contain passage.

So, I conclude, it is much more likely, if time does not pass, that we have veridical experiences of a static world rather than illusory experiences of a dynamical world.

Want more?

Read the full article at https://journals.publishing.umich.edu/ergo/article/id/2914/.

About the author

Kristie Miller is Professor of Philosophy and Director of the Centre for Time at the University of Sydney. She writes on the nature of time, temporal experience, and persistence, and she also undertakes empirical work in these areas. At the moment, she is mostly focused on the question of whether, assuming we live in a four-dimensional block world, things seem to us just as they are. She has published widely in these areas, including three recent books: “Out of Time” (OUP 2022), “Persistence” (CUP 2022), and “Does Tomorrow Exist?” (Routledge 2023). She has a new book underway on the nature of experience in a block world, which hopefully will be completed by the end of 2024.