In this post, Brandon Smith discusses the article he recently published in Ergo. A link to the full-length version of Brandon’s article is given at the end of this post.

Like many of his fellow philosophers in the seventeenth century, Benedict de Spinoza (1632–1677) was heavily engaged with the views of ancient thinkers. In particular, Spinoza’s philosophy shares many affinities with Stoicism (James 1993; Miller 2015; Pereboom 1994). Both, for example:

- think that God is the universe itself (pantheism) and that everything that occurs happens necessarily (determinism);

- conceive of happiness as being rational and virtuous;

- critique passions as flawed judgments about good and bad;

- promote a therapeutic approach to combatting harmful passions through the practice of modifying our value judgments; and

- distinguish between passions and rational emotions.

However, Spinoza also (from his perspective) improves on certain Stoic doctrines by denying that God acts purposefully, acknowledging the goodness of passions in certain contexts, and conceiving of virtue and happiness as both physical and intellectual in nature (DeBrabander 2007; Long 2003; Miller 2015).

Curiously, Spinoza also shares noteworthy affinities with Epicureanism, an important ancient opponent to Stoicism (Bove 1994; Guyau 2020; Lagrée 1994; Vardoulakis 2020). For instance, Spinoza and Epicurus are both committed to:

- a rejection of providence, creation, supernatural phenomena, and the immortality of the soul;

- materialistic (rather than supernatural) explanations of natural phenomena; and

- a pleasure-oriented conception of happiness.

My focus in this paper is on the last of these, a pleasure-oriented conception of happiness. I think that pleasure plays a foundational role in both of their philosophies, and that previous scholarship does not capture the richness and nuance of their agreements and disagreements on this subject. Moreover, Spinoza’s agreements with Epicurus on pleasure make it clear that he is neither a dogmatic disciple of Stoicism nor a mere innovator on it, despite the common ground he shares with the Stoics in many respects. Ultimately, I argue that Spinoza and Epicurus are committed to three central claims that the Stoics reject:

- pleasure holds a necessary connection to being healthy;

- pleasure manifests healthy being both through positive changes in one’s state and through states of healthy being in themselves; and

- pleasure is by nature good.

Epicurus distinguishes between two kinds of pleasure: kinetic and katastematic (DL X.136; OM I.37). Kinetic pleasure represents a change in one’s state of being through the process of satisfying a desire. Necessary kinetic pleasures, like eating, drinking, sleeping, and learning, directly promote our natural functioning or health by removing either pain in the body or disturbance in the mind. Unnecessary kinetic pleasures, by contrast, diversify the expression of healthy being through desires related to preferences (e.g., satisfying my basic need for food through steak or chocolate in particular), activities (e.g., reading or running), or external things (e.g., wealth, marriage, or social approval). Katastematic pleasure is the enjoyment of being healthy in itself through the absence of desire: for example, being satiated, well-rested, and tranquil. Happiness, as the highest good, consists specifically in katastematic pleasure. According to Epicurus, all pleasures essentially promote healthy being and happiness, and are thus intrinsically good, because they either lead to, constitute, or diversely express the unimpeded natural functioning of the body or mind. A pleasure can therefore be bad only insofar as it is pursued in a manner that leads to its own destruction by causing pain or disturbance (i.e., impediments to natural functioning), namely through excess or through a misunderstanding of the hierarchy of value amongst pleasures (LM 128–132; PD VIII, XXIX–XXX).

Spinoza makes a similar distinction between transitional and non-transitional pleasures (E3da2; E5p36s; E5p42). All individuals possess an essential power to express and preserve themselves through bodily and mental activities (E3p6; E4p38–9; E4p26). Transitional pleasures, like eating, drinking, and learning, increase our self-affirmative power. Non-transitional pleasures express our degree of self-affirmative power in itself, through bodily activities like sculpting or running and through mental activities like scientific understanding of God, nature, and ourselves as human beings and individuals. Blessedness, as the highest happiness and good, consists specifically in the non-transitional pleasure of being as physically and intellectually active as possible. For Spinoza, however, both kinds of pleasure are by nature good insofar as they are tied to promoting bodily and mental health in the form of physical and intellectual self-empowerment. Any badness that arises from a pleasure is due to that pleasure being enjoyed in an excessive manner that undermines its self-empowering nature. Insofar as we knowingly pursue pleasure in line with its nature, it can only lead to flourishing (E4p41–4s; E5p10s).

In summary, both Epicurus and Spinoza draw necessary connections between pleasure, health, goodness, and happiness.

Health manifests itself as unimpeded bodily/mental functioning for Epicurus and as self-affirmative bodily/mental power for Spinoza. Because health is the shared metric of goodness here and happiness is the ultimate good, both philosophers place a happy life in the joy of healthy being (i.e., natural functioning or self-affirmative power) itself. From this foundation, they distinguish between pleasures as positive health-oriented changes in one’s state of being (kinetic pleasure and transitional pleasure) and pleasures as expressions of healthy being in itself (katastematic pleasure and non-transitional pleasure). It is pleasure’s essential role in promoting bodily and mental health that leads Epicurus and Spinoza to argue that all forms of pleasure are by nature good, and that pleasures can only be bad if enjoyed in a manner that undermines their health-promoting nature as pleasures.

Epicurus and Spinoza, in their own distinctive ways, help us to see the true nature and value of pleasure in our pursuit of flourishing and fulfillment. Epicurus offers us an account of happiness which carries the advantage of being fairly easy to achieve and maintain as the simple enjoyment of physical and mental health, while Spinoza offers us an account which carries the advantage of strongly emphasizing the active connotations of being and living well, in order to encourage us to joyfully express ourselves as fully as possible both physically and intellectually. The ultimate lesson for us is that any harm that comes to us by way of pleasure is the result of our own misunderstanding and misuse of nature’s greatest good and not at all the fault of pleasure (in any of its forms) or our essential desire for it.

References

- Bove, Laurent (1994). “Épicurisme et spinozisme: l’éthique”. Archives de Philosophie 57(3): 471–484.

- Cicero, Marcus Tullius (2004). On Moral Ends. Julia Annas (Ed.) and Raphael Woolf (Trans.). Cambridge University Press. [OM]

- Epicurus (1994). “Letter to Menoeceus”. In Brad Inwood and L.P. Gerson (Eds. and Trans.), The Epicurus Reader: Selected Writings and Testimonia (28–31). Hackett. [LM]

- Epicurus (1994). “Principal Doctrines”. In Brad Inwood and L.P. Gerson (Eds. and Trans.), The Epicurus Reader: Selected Writings and Testimonia (32–36). Hackett. [PD]

- Guyau, Jean-Marie (2020). “Spinoza: A Synthesis of Epicureanism and Stoicism”. Frederico Testa (Trans.). Parrhesia 32: 33–44.

- James, Susan (1993). “Spinoza the Stoic”. In Tom Sorrell (Ed.), The Rise of Modern Philosophy: The Tensions between the New and Traditional Philosophies from Machiavelli to Leibniz (289–316). Oxford University Press.

- Lagrée, Jacqueline (1994). “Spinoza ‘Athée & Épicurien’”. Archives de Philosophie 57(3): 541–558.

- Long, A. A. (2003). “Stoicism in the Philosophical Tradition”. In Jon Miller and Brad Inwood (Eds.), Hellenistic and Early Modern Philosophy (7–29). Cambridge University Press.

- Miller, Jon (2015). Spinoza and the Stoics. Cambridge University Press.

- Pereboom, Derk (1994). “Stoic Psychotherapy in Descartes and Spinoza”. Faith and Philosophy 11(4): 592–625.

- Spinoza, Benedict de (2002). Ethics. In Michael L. Morgan (Ed.) and Samuel Shirley (Trans.), Spinoza: Complete Works (213–282). Hackett. [E] [da = definitions of the affects/emotions; p = proposition; s = scholium]

- Vardoulakis, Dmitris (2020). Spinoza, The Epicurean: Authority and Utility in Materialism. Edinburgh University Press.

Want more?

Read the full article at https://journals.publishing.umich.edu/ergo/article/id/6156/.

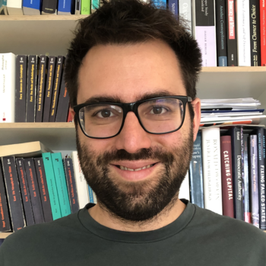

About the author

Brandon Smith is an FRQSC Postdoctoral Fellow at the University of Wisconsin-Madison’s Institute for Research in the Humanities. His research interests include Spinoza, 17th-century philosophy, ancient Greek philosophy, ethics, and the philosophy of happiness. He is in the process of turning his dissertation into a book, The Search for Mind-Body Flourishing in Spinoza’s Eudaimonism, which explores Spinoza’s engagement with Aristotle, Epicurus, and the Stoics on the roles of pleasure, virtue, mind, and body in living a happy, flourishing life.